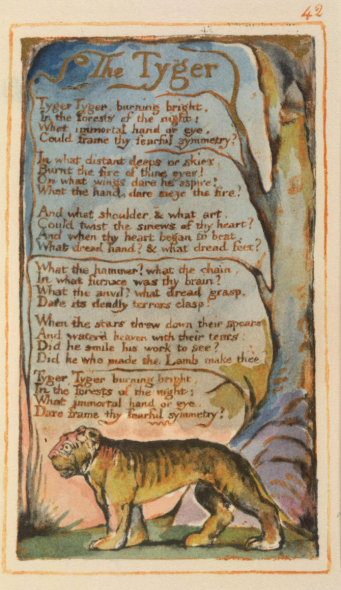

Peter Fenzel, Mark Lee, and Matthew Wrather remember their favorite moments of the 2016 Olympics in Rio, wonder what Jon Snow is doing in an Infiniti commercial, deliver a solid undergraduate poetry survey lecture on meter and prosody, and delve into the sexy-dangerous capital-R Romanticism of William Blake’s “The Tyger.”

→ Download the Overthinking It Podcast (MP3)

Subscribe in iTunes

Subscribe with RSS

Tell us what you think!

Email us

(203) 285-6401 call/text

Another instance of someone reading a poem at an ever-increasing tempo is a Penn and Teller routine where Teller is straitjacketed and suspended while Penn Jillette sits on a chair that keeps Teller from falling to his doom.

Penn starts to read “Casey at Bat” with the understanding that he will stand up when he finishes the poem. At first the reading is at a normal cadence, but it gets more and more frantic the closer Teller gets to escaping the straitjacket.

https://www.youtube.com/watch?v=reY68mDYFKk

I agree that pieces in the self-driving-car trolley-problem genre typically attribute far more perfect information to the car’s computer brain than is realistic. But isn’t part of the point of a self-driving car that it won’t have the same blind spots we do? You can put cameras all over it, not just at eye level pointing forward, and the cars can also communicate their locations to each other.

Yeah, they don’t have the same blind spots we do, but they have different blind spots, even with full 360-degree roof-mounted lidar systems (which are very expensive and which most people will never have).

In particular, so far they seem to struggle with rain, shadows, unexpected lane changes, cars and trucks of varying heights, and cars coming at them from the side when they have decided they have the right of way.

The cars communicating with each other is trickier, because it’s as much an economic question as a technological one: will the tech companies be willing to commoditize this technology, lower their profit margins enough, and offer robust enough support, even to low-end customers, that working autonomous systems become affordable and easy to keep running well for everybody who needs to use a car?

This is particularly tricky when you consider that the average car on American roads is 11 years old, and the average price of a used car in the U.S. is about $8,500 – that is, about 1/20th the price of one semi-autonomous Uber Volvo XC90 on the streets of Pittsburgh.

Also, it’s worth considering that the mechanical parts of a self-driving car might not always perform predictably.

For example, consider this trolley problem variation:

A car is going straight at three people 150 feet away at 80 miles an hour. It has decided that if it applies the brakes, there is an 80% chance it won’t be able to stop before it crosses into the place where the pedestrians currently are (this will change with the weather, the current temperature of the brakes and the tire rubber, the level of wear on the tire tread, the road surface, etc.). It assumes that each person has a 20% chance to move out of the way, and that each person hit has a 60% chance of being seriously injured.

It is on a four-lane, two-way highway with no median and no traffic lights, and people cross it on foot. The left-hand lane ends in 120 feet at a railing set at an angle to the car’s direction of approach. The car is evaluating whether to merge into the left lane and drive squarely into, and perhaps through, the railing, which has a 90% chance of causing serious or fatal injury to the driver.

In this world, 60% of cars currently on the road have some form of autonomous driving systems, and 30% of the cars on the road are capable of communicating with other autonomous cars.

The car’s sensor reports that no car is coming up from behind in the left lane, and it is right about this 85% of the time. The car’s communicator is receiving no signal from any other car.

The car is unable to model what effect hitting the railing, or braking hard while merging left and trying not to hit the railing squarely, would have on other cars, pedestrians, or surrounding property, because no existing equipment can calculate, at that speed, the angle of the railing and all the effects of the collision.

Now, the self-driving car can calculate all the probabilities and make a decision, and we could constantly update those probabilities with new data to optimize its response to this situation: is the expected value of trying to stop while heading toward the three people greater than the expected value of driving into the railing?
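To make that calculation concrete, here is a minimal expected-value sketch using only the numbers in the scenario above. It is a hypothetical illustration, not how any real car decides: it assumes each pedestrian’s dodge is independent, that failing to stop means hitting everyone who didn’t dodge, that the driver is the only occupant, and that “serious” and “serious or fatal” injuries count the same. The function names are made up for this example, and the risks the car can’t model (the railing angle, a car in the left lane that the sensor missed) are simply left out.

```python
# Hypothetical expected-value sketch of the trolley variation above.
# ASSUMPTIONS (not from the scenario): independent events, "failing to stop"
# means every pedestrian who doesn't dodge is hit, one occupant in the car,
# and serious/fatal injuries are weighted equally. Unmodeled risks are omitted.


def expected_serious_injuries_if_braking(
    p_fail_to_stop: float,
    n_pedestrians: int,
    p_dodge: float,
    p_serious_if_hit: float,
) -> float:
    """Expected number of pedestrians seriously injured if the car brakes in its lane."""
    p_hit_each = p_fail_to_stop * (1 - p_dodge)  # chance a given pedestrian is hit
    return n_pedestrians * p_hit_each * p_serious_if_hit


def expected_serious_injuries_if_swerving(
    p_serious_driver: float, n_occupants: int = 1
) -> float:
    """Expected number of occupants seriously injured if the car hits the railing."""
    return n_occupants * p_serious_driver


brake = expected_serious_injuries_if_braking(0.8, 3, 0.2, 0.6)
swerve = expected_serious_injuries_if_swerving(0.9)

print(f"brake:  {brake:.3f}")
print(f"swerve: {swerve:.3f}")
print("choose:", "brake" if brake <= swerve else "swerve into railing")
```

With these inputs, braking works out to about 1.152 expected serious injuries (3 × 0.8 × 0.8 × 0.6) against 0.9 for the railing, so a naive expected-value rule would send the car into the railing, while ignoring the 15% chance that the left lane isn’t actually clear.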

More likely, the self-driving car will fake competence by consulting a statistical database of greatly simplified similar situations and deciding based on that, rather than on its own instruments and onboard processing. This is what they’re trying to do in Pittsburgh: not to build a car capable of driving in Pittsburgh based on what it can see in real time, but to build a very detailed map that the car can refer to in real time, with a person on board to correct any discrepancies with the map.

Personally, I think the safest, best course of action is probably for the car to behave predictably rather than optimally – this will minimize the chance the car will cause additional damage because of factors it can’t take into account (most notably cars behind it that it can’t see and that don’t have working communications).

Basically, the car should have a standard “emergency stop” and use it in virtually all situations, while sending out a simple signal telling other self-driving cars nearby to execute emergency stops as well. That signal would be easy to standardize across platforms and could be made compatible with the lower-end collision warning and automatic braking systems in cars that are not equipped with full lidar setups.

In other words, don’t think about what decision the car should make as a singular ethical actor; think about what decision you can say in advance you want all the cars to make to reduce the risk to the entire system. Then that decision becomes a known factor rather than yet another unknown one.

It is also worth considering that if kids know cars will make complex ethical decisions, swerving wildly whenever they think they might hurt a child while making sure they never hit one, kids will troll and prank these cars on the street to watch them drive into ditches or ponds, or just swerve around the road with their occupants unable to control them.

If you instead build in a standard emergency stopping distance, you can teach kids to stay out of cars’ emergency stopping distance, and any kid who pranks a car anyway is going to have a much less fun time than they otherwise might. There’s also less moral hazard.

Another unknown: the number of occupants of other cars on the road.

The hypothetical scenario I’ve seen presented a lot is this: the car knows, through weight sensors, how many occupants are in the car. It can guess, supposedly, how many pedestrians are at risk of a collision. And if the number of pedestrians is greater than the number of car occupants, it will swerve, possibly colliding fatally with another entity (possibly another vehicle) to save the greater number of lives.

Which is a weird system, because it assumes the only two factors are car occupants and pedestrians. But if you’re colliding with another car with enough force to kill your own car’s occupants, it seems safe to assume the occupants of the other vehicle may also be seriously injured or killed. So instead of, say, “4 pedestrians > 3 car occupants, so swerve,” the comparison becomes 4 pedestrians versus 3 car occupants + X occupants of the car we will collide with.
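A minimal sketch of the two versions of the rule, using the example numbers above. This is hypothetical: the function names and inputs are illustrative, not any manufacturer’s actual logic, and the revised rule assumes the car somehow knows X, which in practice it usually can’t.

```python
# Hypothetical sketch of the occupant-count swerve rule discussed above.
# Illustrative only; not any real self-driving system's decision logic.


def naive_should_swerve(own_occupants: int, pedestrians_at_risk: int) -> bool:
    """Rule as usually presented: swerve if pedestrians outnumber the car's occupants."""
    return pedestrians_at_risk > own_occupants


def revised_should_swerve(
    own_occupants: int, pedestrians_at_risk: int, other_vehicle_occupants: int
) -> bool:
    """Also count the people in the vehicle the car would swerve into (the X above)."""
    return pedestrians_at_risk > own_occupants + other_vehicle_occupants


# 3 occupants vs. 4 pedestrians: the naive rule says swerve...
print(naive_should_swerve(3, 4))        # True
# ...but if the other car holds 2 people, 4 > 3 + 2 is false.
print(revised_should_swerve(3, 4, 2))   # False
```

Because X is rarely observable, a real system would have to guess it, which is exactly where the “bad math” worry in the next paragraph comes in.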

Personally, I love the idea of self-driving cars that can eliminate the human error factor, but I’d be frightened to be on the road with them (even in a non-self-driving car) when there’s a chance that they might swerve into my vehicle and kill me, plus however many other occupants are in my car, based on bad math.

A “big summer tentpole,” is it?

I just wanted to say how much I loved this episode. More poetry lessons, please. When is Paradise Lost Book Club?